1. Edit nginx.conf so that nginx starts as abc:root

user abc abc; # Starts as a regular user; in practice the master process runs as root and the worker processes run as abc.

or

user abc root;

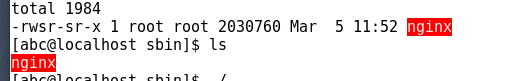

2. Change the nginx binary

chown root nginx # The nginx binary must be owned by root.

chmod a+s nginx # Turn the x permission bits into s (setuid/setgid).

ll nginx

-rwsr-sr-x 1 root root 2030760 Mar 5 11:52 nginx
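The permission string printed by ll nginx can be reproduced from the numeric file mode, which is a quick way to confirm that chmod a+s took effect. The following is a minimal Python sketch; the helper name describe_mode and the sample mode are illustrative assumptions, not part of the original setup.

```python
import stat

def describe_mode(mode: int) -> dict:
    """Return the ls-style permission string and the setuid/setgid flags for a mode."""
    return {
        "text": stat.filemode(mode),
        "setuid": bool(mode & stat.S_ISUID),  # set by the u+s part of a+s
        "setgid": bool(mode & stat.S_ISGID),  # set by the g+s part of a+s
    }

if __name__ == "__main__":
    # Regular file with rwxr-xr-x plus the setuid and setgid bits.
    sample_mode = stat.S_IFREG | 0o6755
    print(describe_mode(sample_mode)["text"])  # prints -rwsr-sr-x
```

For a real file, the mode would come from os.stat("nginx").st_mode instead of the hard-coded sample.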

vim /etc/docker/daemon.json

Switch the registry mirror. If pulls still fail or other errors are reported, consider clearing the cache with the following command.

docker system prune -a -f

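The following mirrors are public. When an installation in K8S cannot complete, the usual cause is a failed image download; reordering the mirrors below can help it finish.

{"registry-mirrors": ["http://f1361db2.m.daocloud.io","https://mirror.ccs.tencentyun.com","https://registry.cn-hangzhou.aliyuncs.com"]}

The following is my own: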

https://cr.console.aliyun.com/cn-hangzhou/instances/mirrors

{

"bip":"192.168.55.1/24",

"registry-mirrors": ["https://2na48vbddcw.mirror.aliyuncs.com"]

}

Characters were removed from the ID I normally use, leaving only 8 letters.

sudo systemctl daemon-reload

sudo systemctl restart docker
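Docker will not start with a malformed daemon.json, and typographic quotes picked up when copying from a web page are an easy way to break it. Below is a minimal Python sketch that checks the file content before restarting; the function name check_daemon_config is hypothetical and the sample string is only an illustration.

```python
import json

def check_daemon_config(text: str) -> list:
    """Parse daemon.json text and return its registry mirrors, or raise ValueError."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        # Curly quotes instead of straight quotes end up here.
        raise ValueError(f"daemon.json is not valid JSON: {exc}") from exc
    if not isinstance(config, dict):
        raise ValueError("daemon.json must contain a JSON object")
    mirrors = config.get("registry-mirrors", [])
    if not isinstance(mirrors, list):
        raise ValueError("registry-mirrors must be a JSON array")
    for url in mirrors:
        if not isinstance(url, str) or not url.startswith(("http://", "https://")):
            raise ValueError(f"mirror is not an http(s) URL: {url!r}")
    return mirrors

if __name__ == "__main__":
    sample = '{"bip": "192.168.55.1/24", "registry-mirrors": ["https://mirror.ccs.tencentyun.com"]}'
    print(check_daemon_config(sample))
```

To check the real file, pass open("/etc/docker/daemon.json").read() to the function.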

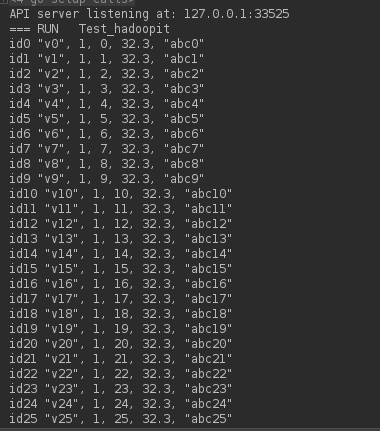

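A SequenceFile is a set of key-value pairs. In practice, when Hive creates a table over one, the key carries no meaning; Hive splits the data into columns based only on the format of the value.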
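The idea that only the value matters can be sketched in a few lines of Python. This is not how Hive is implemented; it only mimics the visible effect. The function name rows_from_pairs and the sample records are made up for illustration.

```python
def rows_from_pairs(pairs, delimiter=","):
    """Drop each key and split each value into column fields."""
    return [value.split(delimiter) for _key, value in pairs]

if __name__ == "__main__":
    # Made-up (key, value) records; the keys never reach the output rows.
    pairs = [
        (b"\x00", "idx1,uid1,1,2,3.5,memo1"),
        (b"\x01", "idx2,uid2,3,4,7.0,memo2"),
    ]
    for row in rows_from_pairs(pairs):
        print(row)
```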

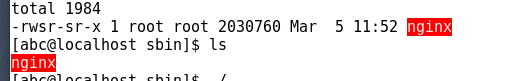

0. Log in to the container and connect to Hive

docker-compose -f docker-compose-hive.yml exec hive-server bash

/opt/hive/bin/beeline -u jdbc:hive2://localhost:10000

1. Create the table

create external table sfgz(

`idx` string,

`userid` string,

`flag` string,

`count` string,

`value` string,

`memo` string)

partitioned by (dt string)

row format delimited fields terminated by ','

stored as sequencefile

location '/user/sfgz';

2. Load partitions

Method 1:

hadoop fs -mkdir -p /user/sfgz/dt=2010-05-06/

hadoop fs -put /tools/mytest.txt.sf /user/sfgz/dt=2019-05-17

hadoop fs -put /tools/mytest.txt.sf /user/sfgz/dt=2010-05-04

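Files uploaded this way are not recognized by Hive directly. The corresponding partitions must first be added to the metastore with alter table ... add partition before they can be queried.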
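When several dt=... directories have been uploaded by hand, the matching statements can be generated rather than typed. A minimal Python sketch, assuming directory names of the form dt=YYYY-MM-DD; the function name add_partition_statements is hypothetical.

```python
import re

PARTITION_DIR = re.compile(r"^dt=(\d{4}-\d{2}-\d{2})$")

def add_partition_statements(table: str, dir_names: list) -> list:
    """Build one 'alter table ... add partition' statement per dt=YYYY-MM-DD directory."""
    statements = []
    for name in sorted(dir_names):
        match = PARTITION_DIR.match(name.strip("/"))
        if match is None:
            continue  # skip anything that is not a dt=... partition directory
        statements.append(
            f"alter table {table} add partition(dt='{match.group(1)}');"
        )
    return statements

if __name__ == "__main__":
    for stmt in add_partition_statements("sfgz", ["dt=2019-05-17", "dt=2010-05-04"]):
        print(stmt)
```

The printed statements can then be pasted into beeline.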

Method 2, which can be queried as soon as the load finishes:

load data local inpath '/tools/mytest.txt.sf' into table sfgz partition(dt='2009-03-01'); -- Data loaded this way can be queried directly.

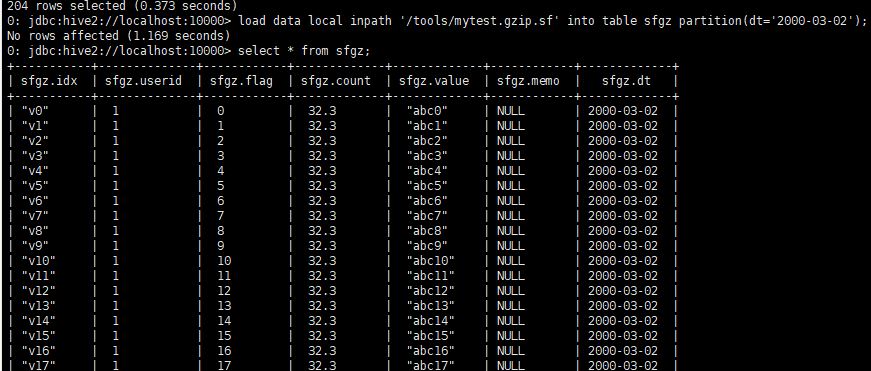

load data local inpath '/tools/mytest.gzip.sf' into table sfgz partition(dt='2000-03-02');

3. Check partition information:

show partitions sfgz;

4. Add a partition

alter table sfgz add partition(dt='2000-03-03');

5. Insert a record:

insert into sfgz partition(dt='2019-05-16')values('idx3','uid6','5','6','34.7','uid3test2');

6. Count statements:

select count(*) from sfgz; -- Not supported in KMR.

select count(idx) from sfgz; -- The only form supported in KMR.

7. Other common commands

show databases;

use database;

show tables;

select * from sfgz where dt='2000-03-03';

msck repair table sfgz; -- Partition repair command.

1. Download the Docker repository https://github.com/big-data-europe/docker-hive.git and install it.

2. Edit its docker-compose.yml file and add a volume mapping to every container.

version: "3"
services:
  namenode:
    image: bde2020/hadoop-namenode:2.0.0-hadoop2.7.4-java8
    volumes:
      - /data/namenode:/hadoop/dfs/name
      - /data/tools:/tools
    environment:
      - CLUSTER_NAME=test
    env_file:
      - ./hadoop-hive.env
    ports:
      - "50070:50070"
  datanode:
    image: bde2020/hadoop-datanode:2.0.0-hadoop2.7.4-java8
    volumes:
      - /data/datanode:/hadoop/dfs/data
      - /data/tools:/tools
    env_file:
      - ./hadoop-hive.env
    environment:
      SERVICE_PRECONDITION: "namenode:50070"
    ports:
      - "50075:50075"
  hive-server:
    image: bde2020/hive:2.3.2-postgresql-metastore
    volumes:
      - /data/tools:/tools
    env_file:
      - ./hadoop-hive.env
    environment:
      HIVE_CORE_CONF_javax_jdo_option_ConnectionURL: "jdbc:postgresql://hive-metastore/metastore"
      SERVICE_PRECONDITION: "hive-metastore:9083"
    ports:
      - "10000:10000"
  hive-metastore:
    image: bde2020/hive:2.3.2-postgresql-metastore
    volumes:
      - /data/tools:/tools
    env_file:
      - ./hadoop-hive.env
    command: /opt/hive/bin/hive --service metastore
    environment:
      SERVICE_PRECONDITION: "namenode:50070 datanode:50075 hive-metastore-postgresql:5432"
    ports:
      - "9083:9083"
  hive-metastore-postgresql:
    image: bde2020/hive-metastore-postgresql:2.3.0
    volumes:
      - /data/tools:/tools
  presto-coordinator:
    image: shawnzhu/prestodb:0.181
    volumes:
      - /data/tools:/tools
    ports:
      - "8080:8080"
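Each SERVICE_PRECONDITION value in this file is a space-separated list of host:port pairs. The bde2020 images appear to wait for these ports before starting the service; treat that as an assumption, since the compose file itself does not show it. The Python sketch below parses such a value and offers a simple TCP probe for troubleshooting. Both function names are hypothetical.

```python
import socket

def parse_precondition(value: str) -> list:
    """Split 'host:port host:port' into a list of (host, port) tuples."""
    targets = []
    for item in value.split():
        host, _, port = item.rpartition(":")
        targets.append((host, int(port)))
    return targets

def is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

if __name__ == "__main__":
    value = "namenode:50070 datanode:50075 hive-metastore-postgresql:5432"
    # Only the parsing is shown here; call is_open(host, port) from inside
    # a container on the compose network to probe each target.
    print(parse_precondition(value))
```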

2. Create the test text file

1,xiaoming,book-TV-code,beijing:chaoyang-shagnhai:pudong

2,lilei,book-code,nanjing:jiangning-taiwan:taibei

3,lihua,music-book,heilongjiang:haerbin

3,lihua,music-book,heilongjiang2:haerbin2

3,lihua,music-book,heilongjiang3:haerbin3

3. Start and connect to the Hive service.

docker-compose up -d

docker-compose exec hive-server bash

/opt/hive/bin/beeline -u jdbc:hive2://localhost:10000

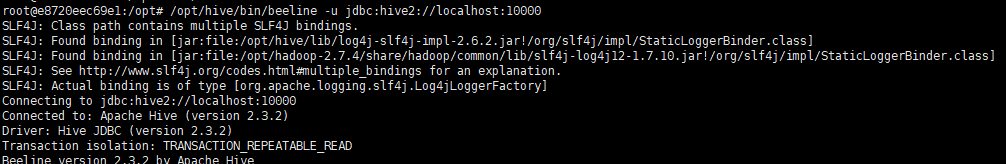

4. Create an external table

create external table t2(

id int

,name string

,hobby array<string>

,add map<string,string>

)

row format delimited

fields terminated by ','

collection items terminated by '-'

map keys terminated by ':'

location '/user/t2';
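The three delimiters in this table definition decide how each text line is cut up: ',' separates fields, '-' separates collection items, and ':' separates a map key from its value. A minimal Python sketch of the same splitting, with hobby read as a list and add read as a map; the function name parse_t2_line is hypothetical and this only mimics the result, not Hive's internals.

```python
def parse_t2_line(line: str) -> dict:
    """Split one text row using ',' for fields, '-' for items and ':' for map keys."""
    id_text, name, hobby_text, add_text = line.rstrip("\n").split(",")
    hobby = hobby_text.split("-")
    add = dict(item.split(":", 1) for item in add_text.split("-"))
    return {"id": int(id_text), "name": name, "hobby": hobby, "add": add}

if __name__ == "__main__":
    row = parse_t2_line("2,lilei,book-code,nanjing:jiangning-taiwan:taibei")
    print(row["hobby"])  # prints ['book', 'code']
    print(row["add"])    # prints {'nanjing': 'jiangning', 'taiwan': 'taibei'}
```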

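5. Upload the file into the directory created in the previous step.

Method 1: in Hive's beeline terminal, use:

load data local inpath '/tools/example.txt' overwrite into table t2; -- Deletes all existing files, then writes the new file.

load data local inpath '/tools/example.txt' into table t2; -- Adds the new file to the directory (the only difference is overwrite).

Method 2: upload the file with hadoop fs -put.

hadoop fs -put /tools/example.txt /user/t2 # The file name is unchanged.

hadoop fs -put /tools/example.txt /user/t2/1.txt # The file is stored as 1.txt.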

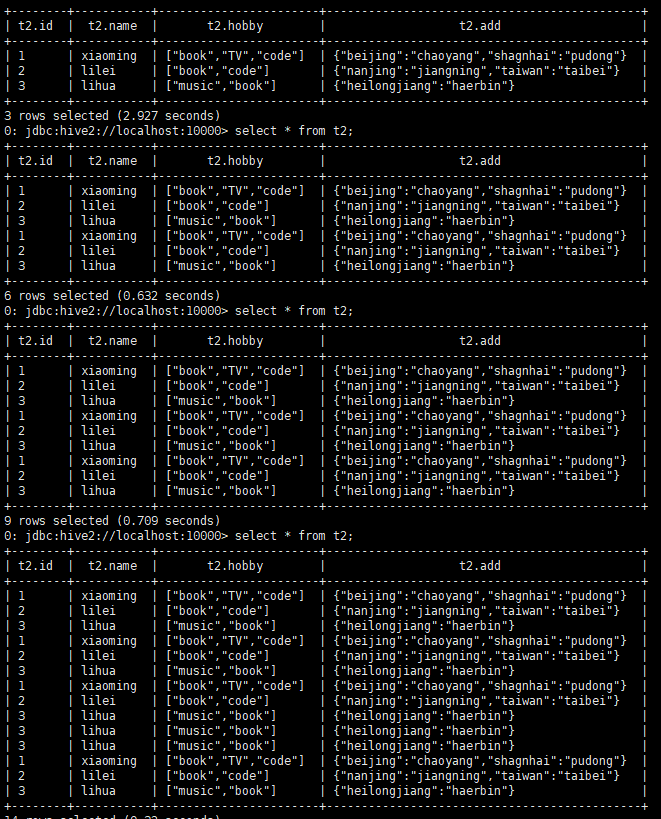

6. Verify from the Hive command line

select * from t2; -- Run once after each upload.

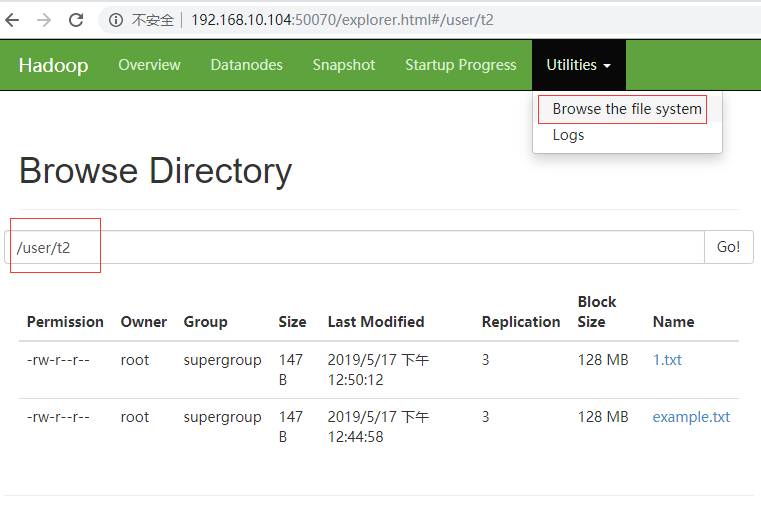

7. The newly uploaded files can also be browsed in Hadoop's file browser.

Records within the same file appear to be de-duplicated automatically.

——————————————-

What if the file is a SequenceFile?

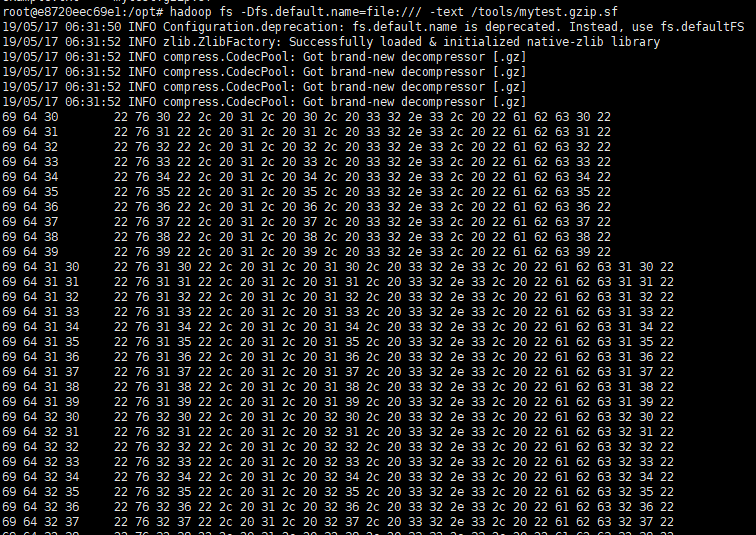

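1. Inspect the contents of the SequenceFile.

hadoop fs -Dfs.default.name=file:/// -text /tools/mytest.gzip.sf # Deprecated: fs.default.name is the old property name.

hadoop fs -Dfs.defaultFS=file:/// -text /tools/mytest.txt.sf

The actual content is:

2. Create the table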

create external table sfgz(

`idx` string,

`userid` string,

`flag` string,

`count` string,

`value` string,

`memo` string)

partitioned by (dt string)

row format delimited fields terminated by ','

stored as sequencefile

location '/user/sfgz';

3. Upload the file

Method 1:

hadoop fs -mkdir -p /user/sfgz/dt=2010-05-06/

hadoop fs -put /tools/mytest.txt.sf /user/sfgz/dt=2019-05-17

hadoop fs -put /tools/mytest.txt.sf /user/sfgz/dt=2010-05-04

With this method the partitions still have to be reloaded manually; the reload command is:

Method 2:

load data local inpath '/tools/mytest.txt.sf' into table sfgz partition(dt='2009-03-01'); -- Data loaded this way can be queried directly.

load data local inpath '/tools/mytest.gzip.sf' into table sfgz partition(dt='2000-03-02');

Big Data Europe

The most reliable templates at present

https://github.com/big-data-europe/docker-spark

https://github.com/big-data-europe/docker-hive

https://github.com/big-data-europe

Hive documentation

https://cwiki.apache.org/confluence/display/Hive/Home#Home-UserDocumentation

Qiniu log format

116.231.10.133 HIT 0 [07/May/2019:09:04:56 +0800] "GET http://wdl1.cache.wps.cn/per-plugin/dl/addons/pool/win-i386/wpsminisite_3.0.0.37.7z HTTP/1.1" 206 66372 "-" "-"
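To pull the transferred size out of a line like this one, split the text after the HTTP/1.1" marker on spaces and take the token after the status code. The Python sketch below assumes that this token is the byte count; the function name download_size is hypothetical.

```python
def download_size(line: str) -> int:
    """Return the number that follows the status code, or 0 if it cannot be read."""
    marker = 'HTTP/1.1"'
    idx = line.find(marker)
    if idx < 0:
        return 0
    parts = line[idx:].split(" ")
    # parts[0] is the marker, parts[1] the status code, parts[2] the size.
    if len(parts) < 3 or not parts[2].isdigit():
        return 0
    return int(parts[2])

if __name__ == "__main__":
    sample = (
        '116.231.10.133 HIT 0 [07/May/2019:09:04:56 +0800] '
        '"GET http://wdl1.cache.wps.cn/per-plugin/dl/addons/pool/win-i386/'
        'wpsminisite_3.0.0.37.7z HTTP/1.1" 206 66372 "-" "-"'
    )
    print(download_size(sample))  # prints 66372
```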

Related Qt code

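#include <cstdio>
#include <QByteArray>
#include <QCoreApplication>
#include <QDir>
#include <QFile>
#include <QString>
#include <QStringList>

// Return the size field that follows the status code after HTTP/1.1", or 0 if it is missing.
int getDownloadSize(const QString &line) {
    int idx = line.indexOf("HTTP/1.1\"");
    if (idx > 0) {
        QString sub = line.mid(idx);
        QStringList sets = sub.split(" ");
        // sets[0] is HTTP/1.1", sets[1] the status code, sets[2] the size.
        if (sets.size() > 2) {
            return sets.at(2).toInt();
        }
    }
    return 0;
}

int main(int argc, char *argv[])
{
    QCoreApplication a(argc, argv);
    QString dpath("F:\\日志0508\\18");
    QDir d(dpath);
    // Regular files only, so "." and ".." are skipped.
    QStringList fs = d.entryList(QDir::Files);
    // Both totals start at 1 so the ratio below never divides by zero.
    qint64 plug_total = 1;
    qint64 other_total = 1;
    for (int i = 0; i < fs.size(); i++) {
        QFile file(d.filePath(fs.at(i)));
        if (!file.open(QIODevice::ReadOnly | QIODevice::Text)) {
            continue;
        }
        int linecnt = 0;
        while (!file.atEnd()) {
            QByteArray line = file.readLine();
            // Only count requests for the wdl1.cache.wps.cn host.
            if (line.indexOf("wdl1.cache.wps.cn") < 0) {
                continue;
            }
            int idx = line.indexOf("win-i386");
            int size = getDownloadSize(QString::fromUtf8(line));
            qint64 skidx = file.pos();
            if (idx > 0) {
                plug_total += size;   // URLs containing win-i386
            } else {
                other_total += size;  // all other URLs on this host
            }
            linecnt++;
            // Progress report every 10000 matching lines.
            if (linecnt % 10000 == 0) {
                printf("\r\nlinecnt:%d - skidx:%lld - plug_total:%lld - other_total:%lld - ratio:%5f", linecnt, skidx, plug_total, other_total, double(plug_total) / double(other_total));
            }
        }
        printf("\r\nlinecnt:%d - plug_total:%lld - other_total:%lld - ratio:%5f", linecnt, plug_total, other_total, double(plug_total) / double(other_total));
    }
    printf("\r\nplug_total:%lld - other_total:%lld - ratio:%5f", plug_total, other_total, double(plug_total) / double(other_total));
    // Return directly: a.exec() would start an event loop that nothing ever quits.
    return 0;
}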